Yesterday Matthew Inman (sole proprietor of the generally hilarious webcomic The Oatmeal) put up a post on his site to help raise funds to buy Tesla’s lab, Wardenclyffe Tower, preserve it, and make it into a Tesla Museum. At the time I’m writing this, 10,900 people have committed a total of $480,000 to help make this happen.

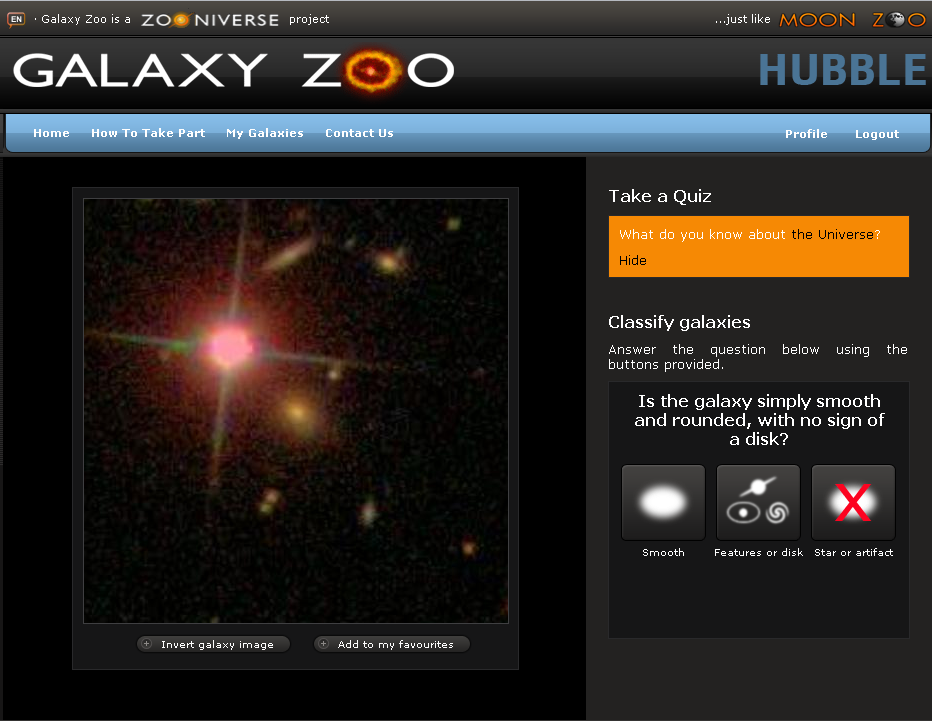

I think folks who work at libraries, archives and museums need to pay attention to this. In particular, people who work at libraries, archives and museums that have a science and technology focus need to pay attention to this.

The Oatmeal and Tesla as the Geek of Geeks

If you don’t follow The Oatmeal, you should. It’s a fun comic. If you do, you’ll know that Inman recently posted a funny and exuberant ode to Nikola Tesla as the geek of all geeks. It’s a story about an obsessive desire to make the world a better place through science and technology. (If you check that story out you should also check out this response from Alex Knapp and Inman’s critique of the critique.) The original cartoon uses Tesla to define what being a geek is. I like the sincerity in this particular quote at the front of it.

Geeks stay up all night disassembling the world so they can put it back together with new features.

They tinker and fix things that aren’t broken.

Geeks abandon the world around them because they are busy soldering together a new one.

For someone who cares about the history of science and technology, and about the preservation and interpretation of the cultural record of science and technology, it is neat to see this kind of back and forth happening on the web. With that said, it is unbelievably exciting to see what happens when that kind of geekiness can be turned into a firehose of funding to support historic preservation.

How is this so amazingly successful?

As cultural heritage organizations get into the crowdfunding world, it makes a lot of sense to study what about this is working so well. While one might not have the kind of audience Inman has, part of why he has that audience is that he’s a funny guy and he knows how to create something that people want to talk about all over the web. Even the name of the project, Let’s Build a Goddamn Tesla Museum, is funny. It is also participatory in the name alone. He is asking us to be a part of something. He is asking us to help make this happen.

Shortly after the post went up, there were posts about it on a range of major blogs. It’s a great story and Inman is already a big deal on the web. Most importantly, Inman’s fans are the kind of people that can get really excited about supporting this particular cause. Beyond that, he publicly called out a series of organizations that might get involved as sponsors, at least one of which was excited to sign on personally. Aside from getting the folks who were interested to just give money, he also asked them to reach out to the organizations. It just so happened that someone who has the email addresses of both Inman and the head of Tesla Motors was thrilled to have the opportunity to connect the dots and help make this thing happen. The project not only mobilizes supporters, it mobilizes people to mobilize supporters, and in so doing lets everybody be a part of the story of making this thing happen.

Is this just a one off thing?

So Inman has been able to turn his web celebrity into a huge boon for a particular cultural heritage site. The next question in my mind is, is this a one-time thing? I think there is good reason to believe that this is actually replicable in a lot of instances.

First off, Inman’s love for science and his audience’s love for science isn’t an oddity. The web is full of science and tech fans and other web celebrities who might be game for doing this kind of thing to connect with fans and help support worthy causes.

Off the top of my head, here are three people I think could, and very likely would, be up for this sort of thing for other projects related to scientists and engineers.

Jonathan Coulton

I would hazard to guess that Jonathan Coulton fans would be thrilled to support an archive’s effort to accession, digitize, and make available parts of Benoit Mandelbrot’s personal papers. I’m not sure exactly who has those papers, but I am sure they are awesome, and I would hazard to guess that the man who wrote an ode to the Mandelbrot Set and the fans who love it would come out in droves to support preserving his legacy.

If you haven’t heard Coulton sing the song, take a minute and listen to it.

When you get to the end, you find the kind of sincerity about the possibility of science making our world a better place.

You can change the world in a tiny way

And you’re just in time to save the day

Sweeping all our fears away

You can change the world in a tiny way

Go on, change the world in a tiny way

Come on, change the world in a tiny way

We can change the world in a tiny way, and that is a message that Coulton’s fans want to hear. It’s really the same message for Inman’s geeks who are taking apart and rebuilding the world with new features.

Randall Munroe

I would similarly hazard to guess that XKCD fans would follow Randall in any given campaign he wanted to start around a scientist or a technologist. You can see the same enthusiasm for science and technology in a lot of the XKCD comics. Here are a few of my favorites. For a sense of what people will do based on XKCD comics, I would suggest reading the section on “Inspired Activities” in XKCD’s Wikipedia article.

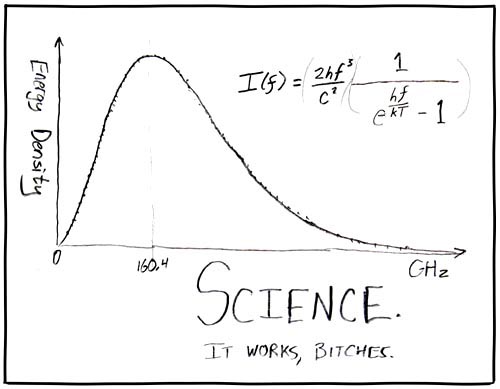

For starters, there is the ever popular “Science: It Works” comic.

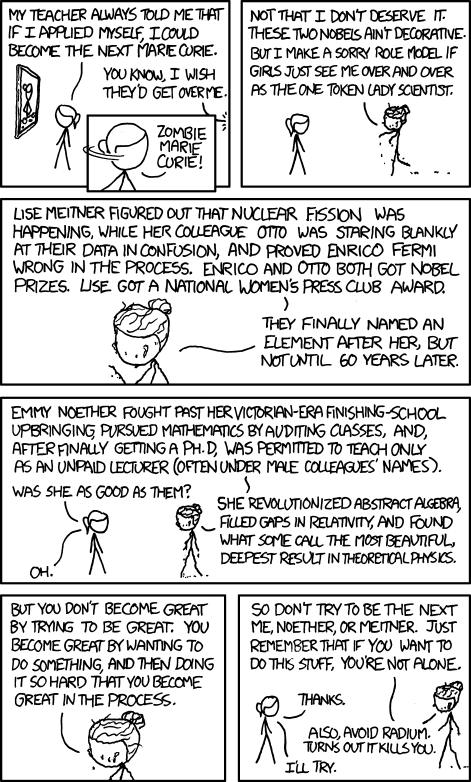

For a specific example featuring an actual scientist, check out this Zombie Curie comic.

Kate Beaton

Kate Beaton makes funny, clever, and rather nice looking historical comics. Many of those comics, like the comic about Rosalind Franklin below, are about scientists. I would hazard to guess that her fans would follow her to support these kinds of projects as well.

So these were just a few examples of other folks that I think could potentially pull this kind of thing off. I could also imagine all three being up for this sort of thing. In all three cases, you have geeks who have been able to do their long tail thing and find the other folks that geek out about the same kinds of things.

As a result, I think we could be looking at something that has the makings of a model for libraries, archives and museums to think about. Who has an audience and the idealism to help champion your cause? The web is full of people who care about science. Just take a look at what happened when someone remixed Carl Sagan’s Cosmos into a song. There are some amazing people out there making a go of a career by targeting geeky niches on the web. If they are up for helping, I think they have a lot to offer. I’m curious to hear folks’ thoughts about how these kinds of partnerships might be brokered. What can we do to help connect these dots?