Libraries, archives and museums have a long history of participation and engagement with members of the public. In my last post, I charted some problems with terminology, suggesting that the cultural heritage community can re-frame crowdsourcing as engaging with an audience of committed volunteers. In this post, I get a bit more specific about the two different activities that get lumped together when we talk about crowdsourcing. I’ve included a series of examples and a bit of history and context for good measure.

For the most part, when folks talk about crowdsourcing they are talking about two different kinds of activities: human computation and the wisdom of crowds.

Human Computation

Human Computation is grounded in the fact that human beings are able to process particular kinds of information and make judgments in ways that computers can’t. To this end, there is a range of projects described as crowdsourcing that are anchored in the idea of treating people as processors. The best way to explain the concept is through a few examples of the role human computation plays in crowdsourcing.

ReCaptcha is a great example of how the processing power of humans can be harnessed to improve cultural heritage collection data. Most readers will be familiar with the little ReCaptcha boxes we fill out when we need to prove that we are in fact a person and not an automated system attempting to log in to some site. Our ability to read the strange and messed up text in those little boxes proves that we are people, but in the case of ReCaptcha it also helps us correct the OCR’ed text of digitized New York Times and Google Books transcripts. The same capability that allows people to be differentiated from machines is what allows us to help improve the full text search of the digitized New York Times and Google Books collections.

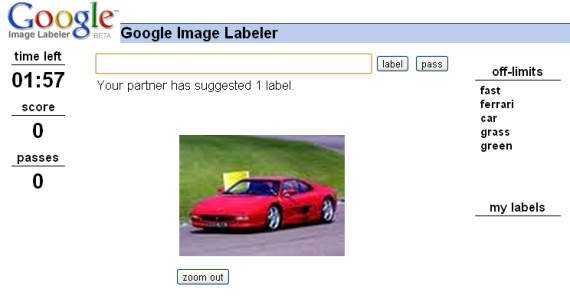

The principles of human computation are similarly on display in the Google Image Labeler. From 2006 to 2011 the Google Image Labeler game invited members of the public to describe and classify images. For example, in the image below a player is viewing an image of a red car. Somewhere else in the world another player is also viewing that image. Each player is invited to key in labels for the image, with a series of “off-limits” words which have already been associated with the image. Each label I enter that matches a label entered by the other player earns points in the game. The game has inspired an open source version specifically designed for use at cultural heritage organizations. The design of this interaction is such that, in most cases, it generates high-quality descriptions of images.

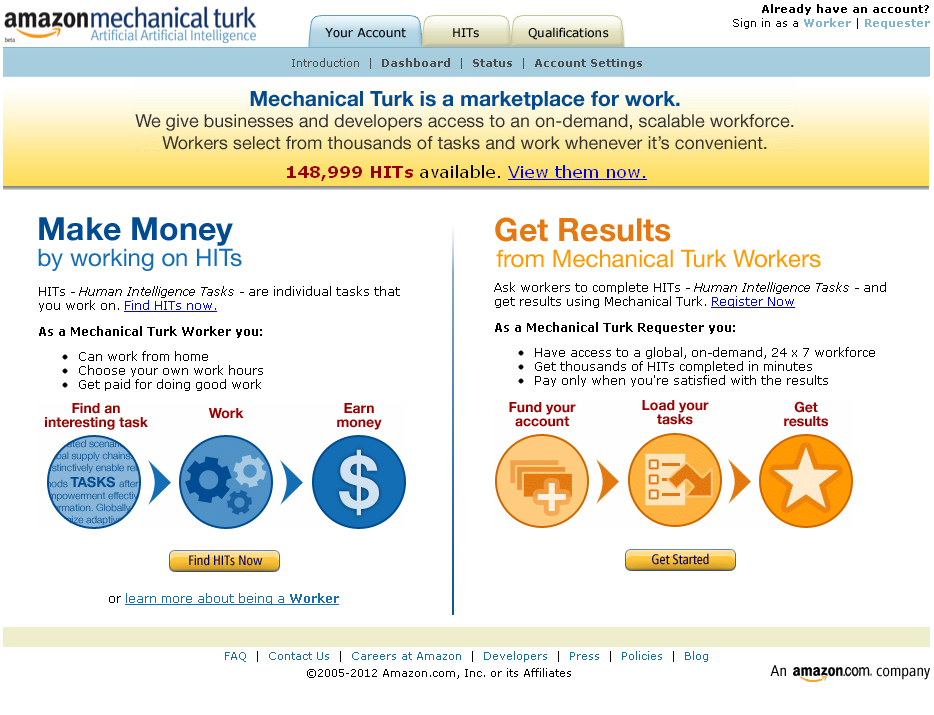
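For readers who think in code, the matching rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Google’s implementation: the function name `score_round`, the case-insensitive comparison, and the value of 100 points per match are all hypothetical choices of mine.

```python
def score_round(labels_a, labels_b, off_limits, points_per_match=100):
    """Score one round of a two-player label-matching game.

    Hypothetical sketch: the point value and the normalization
    (trimmed, lowercase) are assumptions, not details from the real game.
    """
    # Words already associated with the image earn nothing.
    banned = {word.strip().lower() for word in off_limits}

    # Normalize each player's labels and drop the off-limits words.
    a = {word.strip().lower() for word in labels_a} - banned
    b = {word.strip().lower() for word in labels_b} - banned

    # Only labels that both players entered independently count.
    matches = sorted(a & b)
    return matches, len(matches) * points_per_match


matches, points = score_round(
    labels_a=["Car", "red", "convertible", "road"],
    labels_b=["red", "sports car", "Convertible", "sky"],
    off_limits=["car"],
)
print(matches, points)
```

The point of the design is that agreement between two strangers who cannot communicate stands in for quality control, while the off-limits list pushes players past the most obvious labels.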

Both the image labeler and ReCaptcha are fundamentally about tapping into the capabilities of people to process information. Where I had earlier suggested that the kind of crowdsourcing I want us to be thinking about is not about labor, these kinds of human computation projects are often fundamentally about labor. This is most clearly visible in Amazon’s Mechanical Turk project.

The tagline for Mechanical Turk is that it “gives businesses and developers access to an on-demand, scalable workforce” where “workers select from thousands of tasks and work whenever it’s convenient.” The labor focus of this site should give pause to those in the cultural heritage sector, particularly those working for public institutions. There are very legitimate concerns about this kind of labor as serving as a kind of “digital sweatshop.”

While there are legitimate concerns about the potentially exploitive properties of projects like Mechanical Turk, it is important to realize that many of the same human computation activities which one could run through Mechanical Turk are not really the same kind of labor when they are situated as projects of citizen science.

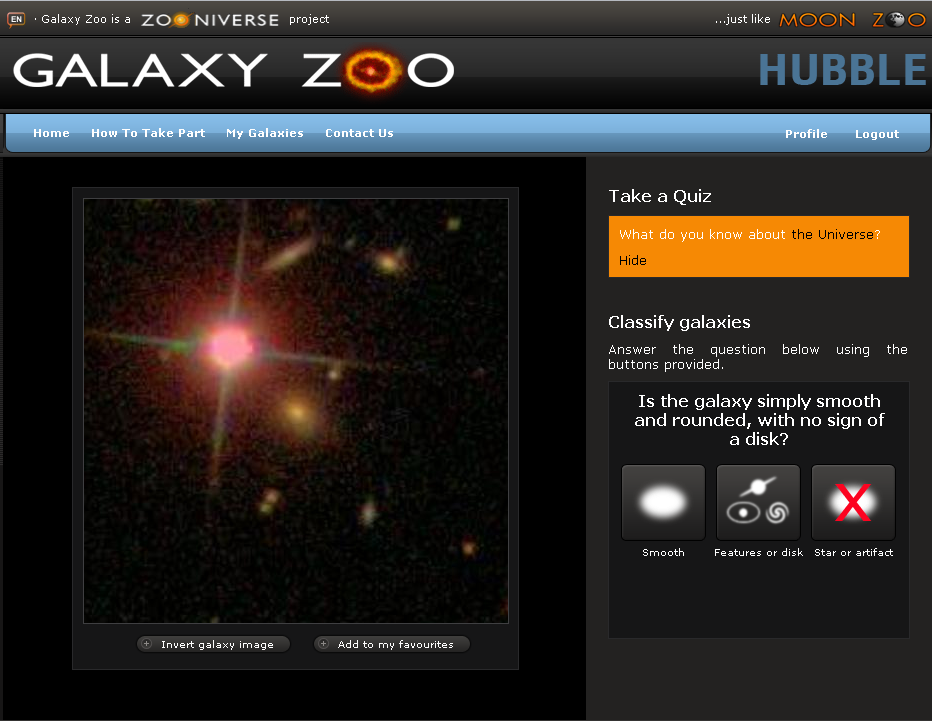

For example, Galaxy Zoo invites individuals to identify galaxies. The activity is basically the same as the Google Image Labeler game. Users are presented with an image of a galaxy and invited to classify it based on a simple set of taxonomic information. While the interaction is more or less the same, the change in context is essential.

Galaxy Zoo invites amateur astronomers to help classify images of galaxies. While the image identification task here is more or less the same as the image identification tasks previously discussed, at least in the early stages of the project, this site often gave amateur astronomers the first opportunity to see these stellar objects. These images were all captured by a robotic telescope, so the first Galaxy Zoo participants who looked at these images were actually the first humans ever to see them. Think about how powerful that is.

In this case, the amateurs who catalog these galaxies do so because they want to contribute to science. Beyond engaging in this classification activity, the Galaxy Zoo project also invites members to discuss the galaxies in a discussion forum. This discussion forum ends up representing a very different kind of crowdsourcing, one based not so much on the idea of human computation but instead on a notion which I refer to here as the wisdom of crowds.

The Wisdom of Crowds, or Why Wasn’t I Consulted

The Wisdom of Crowds comes from James Surowiecki’s grandiosely titled 2004 book, The Wisdom of Crowds: Why the Many Are Smarter Than the Few and How Collective Wisdom Shapes Business, Economies, Societies and Nations. In the book, Surowiecki talks about a range of examples of how crowds of people can create important and valuable kinds of knowledge. Unlike human computation, the wisdom of crowds is not about highly structured activities. In Surowiecki’s argument, the wisdom of crowds is an emergent phenomenon resulting from how discussion and interaction platforms, like wikis, enable individuals to add to and edit each other’s work.

The wisdom of crowds notion tends to come with a bit too much utopian baggage for my tastes. I find Paul Ford’s reformulation of this notion particularly compelling. Ford suggests that the heart of this matter is that the web, unlike other mediums, is particularly well suited to answer the question “Why wasn’t I consulted?” It is worth quoting him here at length:

“Why wasn’t I consulted,” which I abbreviate as WWIC, is the fundamental question of the web. It is the rule from which other rules are derived. Humans have a fundamental need to be consulted, engaged, to exercise their knowledge (and thus power), and no other medium that came before has been able to tap into that as effectively.

He goes on to explain a series of projects that succeed because of their ability to tap into this human desire to be consulted.

If you tap into the human need to be consulted you can get some interesting reactions. Here are a few: Wikipedia, StackOverflow, Hunch, Reddit, MetaFilter, YouTube, Twitter, StumbleUpon, About, Quora, Ebay, Yelp, Flickr, IMDB, Amazon.com, Craigslist, GitHub, SourceForge, every messageboard or site with comments, 4Chan, Encyclopedia Dramatica. Plus the entire Open Source movement.

Each of these cases taps into our desire to respond. The comments section on news articles, or our ability to sign up for an account and start sharing our thoughts and ideas on Twitter or in a Tumblr, has no real equivalent in other media, and it is fundamentally about this desire to be consulted.

The logic of Why Wasn’t I Consulted is evident in one of my favorite XKCD cartoons. In Duty Calls we find ourselves compelled to stay up late and correct the errors of others’ ways on the web. In Ford’s view, this kind of compulsion, this need to jump in and correct things, to be consulted, is something that we couldn’t act on with other kinds of media, and it is ultimately one of the things that powers and drives many of the most successful online communities and projects.

Returning to the example from Galaxy Zoo, where the carefully designed human computation classification exercise provides one kind of input, the project’s very active web forums capitalize on the opportunity to consult. Importantly, some of the most valuable discoveries in the Galaxy Zoo project, including an entirely new kind of green-colored galaxy, were the result of users sharing and discussing some of the images from the classification exercise in the open discussion forums.

Comparing and Contrasting

To some extent, you can think about human computation and the wisdom of crowds as opposing poles of crowdsourcing activity. I have tried to sketch out some of what I see as the differences in the table below.

| | Human Computation | Wisdom of Crowds |
| --- | --- | --- |
| Tools | Sophisticated | Simple |
| Task Nature | Highly structured | Open ended |
| Time Commitment | Quick & Discrete | Long & Ongoing |
| Social Interaction | Minimal | Extensive Community Building |
| Rules | Technically Implemented | Socially Negotiated |

When reading over the table, think about the difference between something like the Google Image Labeler for human computation and Wikipedia for the wisdom of crowds. The former is a sophisticated little tool that prompts us to engage in a highly structured task for a very brief period of time. It comes with almost no time commitment, and there is practically no social interaction. The other player could just as well be a computer for our purposes, and the rules of the game are strictly enforced by the technical system.

In contrast, something like Wikipedia makes use of, at least from the user experience side, a rather simple tool. Click edit, start editing. While the tool is very simple, the nature of our task is huge and open-ended: help write and edit an encyclopedia of everything. While you can do just a bit of Wikipedia editing, its open-ended nature invites much more long-term commitment. Here there is an extensive community building process that results in the social development and negotiation of rules and norms for what behavior is acceptable and what counts as inside and outside the scope of the project.

To conclude, I should reiterate that we can and should think about human computation and the wisdom of crowds not as an either/or decision for crowdsourcing but as two components that are worth designing for. As mentioned earlier, Galaxy Zoo does a really nice job of this. The image classification game is quick, simple and discrete, and it generates fantastic scientific data. Beyond this, the open web forum where participants can build community through discussion of the things they find brings in the depth of experience possible in the wisdom of crowds. In this respect, Galaxy Zoo represents the best of both worlds. It invites anyone interested to play a short and quick game, and if they want to, they can stick around and get much more deeply involved: they can discuss and consult and, in the process, actually discover entirely new kinds of galaxies. I think the future here is going to be about knowing which parts of a crowdsourcing project are about human computation and which parts are about the wisdom of crowds, and getting those two things to work together and reinforce each other.

In my next post I will bring in a bit of work in educational psychology that I think helps to better understand the psychological components of crowdsourcing. Specifically, I will focus in on how tools serve as scaffolding for action and on contemporary thinking about motivation.
